For years, watching a movie or a TV series was a relatively simple act: you sat down, faced one screen, and gave it most of your attention. That assumption no longer holds.

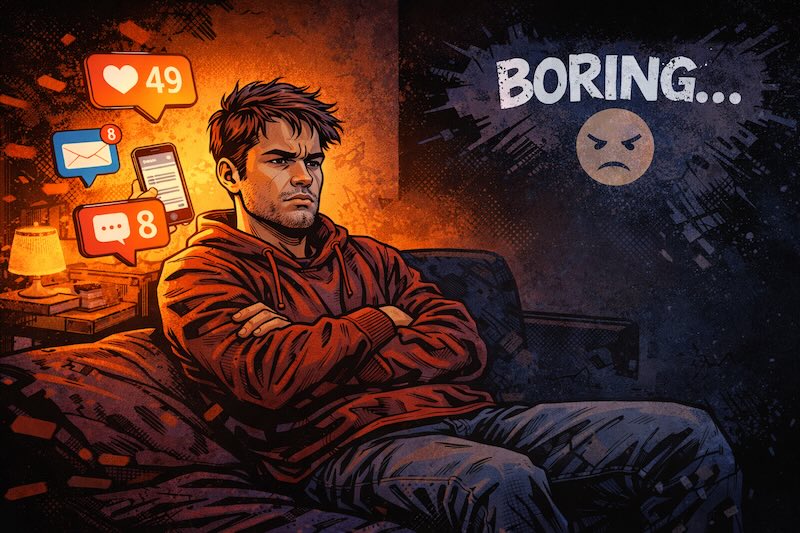

Today, audiovisual consumption almost always happens alongside another device, usually a phone. While a series plays in the background, notifications arrive, timelines refresh, messages demand replies. This phenomenon is often called the second screen, and it has quietly reshaped how stories are written, produced, and consumed.

What is the “Second Screen”?

The idea is straightforward: when people sit down to watch something on platforms like Netflix, Prime Video, or Disney+, there is often another screen competing for their attention: their smartphone.

Studies consistently show that this behavior is especially common among younger audiences (by some estimates, around 60% of younger viewers), but it is far from exclusive to them. Many adults also watch with one eye on the TV and the other on their phone. Attention is no longer singular; it is split by default.

A telling observation from The Guardian

A few months ago, The Guardian published an article that captured this shift particularly well. The piece reported that Netflix had begun encouraging certain types of content designed for “casual viewing”: shows meant to be followed even when viewers are not fully paying attention.

According to the article, some screenwriters were asked to have characters explicitly announce what they are doing, so that viewers who are mostly focused on their phones can still keep track of the plot. If the TV becomes the secondary screen, the story should not demand enough effort to pull attention away from the primary one.

In other words: if your phone is your main screen, the show should not challenge you so much that you feel the need to stop scrolling.

This is not necessarily a conspiracy to “dumb down” audiences. It is better understood as a set of incentives:

- Improve retention

- Optimize engagement metrics

- Compete more effectively within an overcrowded streaming market

Clarity, repetition, and constant reminders become rational design choices.

Why this matters

For platforms and writers

When attention is fragmented, content tends to evolve toward being scroll-proof:

- Dialogue that explains what is happening

- Gentle repetition of key information

- Rhythms built around frequent “peaks” to recapture attention

For viewers

There is a risk here. If everything is designed for distracted consumption, storytelling can become flatter, more explicit, and less demanding. The danger is not that we lose “great art” overnight, but that subtlety, ambiguity, and silence slowly disappear.

The Teletubbies of one era may very well be the Shakespeare of another.

The real competition

This raises an important question: what are TV series actually competing against?

Are they competing with other series? Or are they competing with social media platforms whose entire business model is optimized around capturing and retaining attention?

If the competition is with social networks, then the playing field is uneven. A film competing with another film is not the same as a film competing with an infinite stream of notifications, likes, and algorithmically tuned stimuli. One is cultural competition; the other is closer to digital pharmacology.

This leads to another question: if cultural consumption habits change, should cultural products change with them? Or are we smuggling deeper assumptions into that adaptation?

A dangerous equation

I think many of these changes orbit around an implicit equation:

Stimulation = Entertainment

And, by contrast:

No stimulation = Boredom

Repeated often enough, these equations start to feel obvious.

If I am not being stimulated, I must not be entertained. Visual stimulus becomes the sole measure of engagement, and entertainment loses its conceptual depth.

Why the equation fails

Social media floods us with stimuli to achieve its goals. If we accept that stimulation equals entertainment, then the phone will always win. It will not only feel more stimulating, but also be judged as more entertaining, even when the experience itself is shallow or forgettable.

Think about genuinely entertaining moments in your life. Very few of them involve replying to a stranger on social media. Entertainment, at its core, is not purely sensory. It may rely on sensory input, but it also involves meaning, memory, reflection, and emotional resonance.

Stimulation is not the same thing as entertainment.

The more dangerous half

The second equation is even more troubling:

No stimulation = Boredom

Real boredom can be painful; there is no denying that. But lack of stimulation is not the same as boredom. In fact, it can be the condition for some of our most valuable mental processes.

When there is no room between stimulation and boredom, there is no space for:

- Focused thought

- Conceptual depth

- Reflection

- Creative wandering

Fear of boredom can trap us in a conceptual flatland, jumping endlessly from one stimulus to the next, never staying long enough to think deeply about anything.

Ironically, this fear is historically recent. In other eras, boredom was common and unavoidable. Waiting an hour in a plaza without knowing whether someone would arrive was normal. Today, that silence feels intolerable.

Conclusion

We should not fear boredom, but even more importantly, we should not fear non-stimulation.

Yes, boredom can appear if stimulation is absent for long enough. But before that point, there exists a rich territory of thought, imagination, and mental depth. If we never give ourselves time to enter that space, we may reach the end of the day having consumed endlessly, yet thought very little.

That should not be how our days end by default.

So here is a small invitation:

Put your phone down and dive into non-stimulation.

Let’s see what non-stimulation has to offer.
